Exo2EgoDVC: Dense Video Captioning of Egocentric Procedural Activities Using Web Instructional Videos

概要

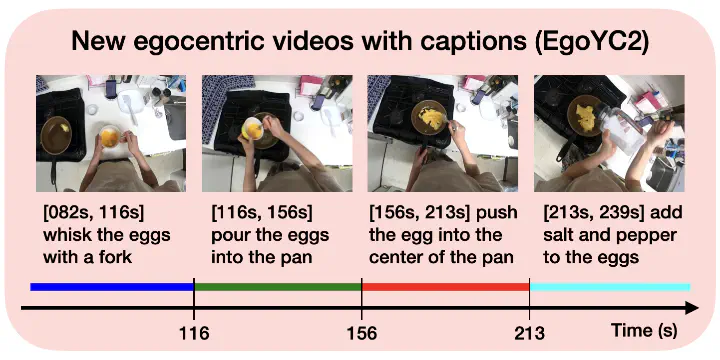

We propose a novel benchmark for cross-view knowledge transfer of dense video captioning, adapting models from web instructional videos with exocentric views to an egocentric view. While dense video captioning (predicting time segments and their captions) is primarily studied with exocentric videos (e.g., YouCook2), benchmarks with egocentric videos are restricted due to data scarcity. To overcome the limited video availability, transferring knowledge from abundant exocentric web videos is demanded as a practical approach. In this work, we first create a real-life egocentric dataset (EgoYC2) whose captions follow the definition of YouCook2 captions, enabling transfer learning between these datasets with access to their ground-truth. Given the view gaps between exocentric and egocentric videos, we propose a view-invariant learning method using adversarial training, which consists of pre-training and fine-tuning stages. We validate our proposed method by studying how effectively it overcomes the view gap problem and efficiently transfers the knowledge to the egocentric domain. Our benchmark will envision methodologies to describe egocentric videos in natural language.